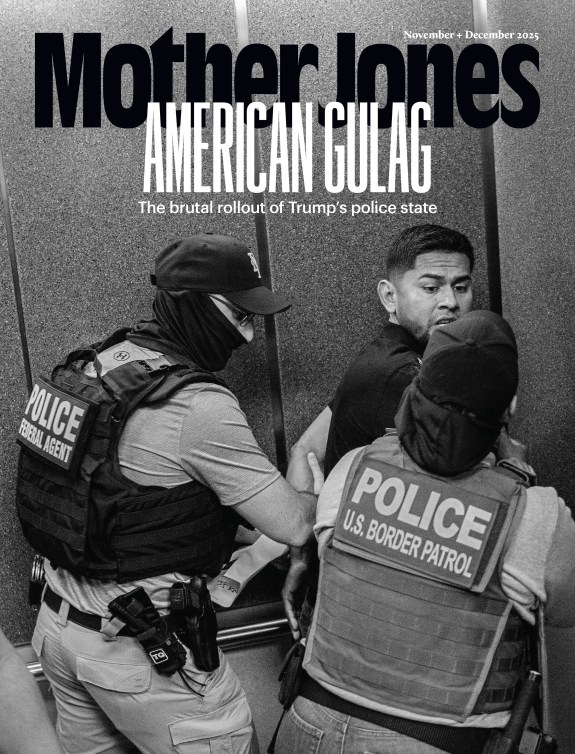

A country riven by ethnic tension. Spontaneous organizing fueled by viral memes. Hateful fake-news posts about “terrorism” from marginalized groups inciting violence and riots.

It’s a story we’ve seen play out around the world recently, from France and Germany to Burma, Sri Lanka, and Nigeria. The particulars are different—gas prices were the trigger in France, lies about cannibalism in Nigeria—but one element has been present every time: Facebook. In each of these countries, the platform’s power to accelerate hate and disinformation has translated into real-world violence, from riots to murder.

Americans used to watch such horrors with the comforting conviction that It Can’t Happen Here. But we’ve learned (if we needed to) that the US is not as exceptional as we might have wanted to think, nor are contemporary societies as protected from civil conflict as we’d hoped.

Just picture a reasonably proximate scenario: It’s the winter of 2020 and Donald Trump—having lost re-election by a margin closer than what many people expected—is in full attack mode, whipping up stories of rampant election fraud. Local protest groups organize spontaneously, coalescing around Facebook posts assailing liberals, Muslims, feminists. (This is exactly what happened in France this year, a phenomenon known as “anger groups” that birthed the yellow vest protests.) Pizzagate-style conspiracy theories race through these groups, inflaming their more extreme members. Add a population that is, unlike those of France and Nigeria, armed to the teeth, and the picture gets pretty dark.

In other words: Facilitating the election of a demagogue committed to stoking racial prejudice and enriching his family may not yet have shown us the worst dangers of social media in a divided society. And if we don’t get a handle on the power of the platforms, we could see the worst play out sooner than we think (especially with a president ever more unbound from reason).

This may sound grim, but it’s not intended to leave you stockpiling canned goods or looking up New Zealand immigration law. It’s aimed to ratchet up the urgency with which we think about this problem, to bring to it the kind of focus we brought to other times when a single corporation—Standard Oil, AT&T, Microsoft—controlled key infrastructure to an extent that gave it unacceptable power over society’s future.

In the case of social platforms, their power is over the currency of democracy: Information. Nearly 70 percent of Americans say they get some of their news via social media, and the real number is probably higher. That’s a huge shift not just in terms of distribution, but in terms of quality, too. In the past, virtually all the institutions distributing news had some kind of verification standards, even if thin or compromised. Facebook has none. Right now, we could post an entirely fake news item and, for as little as $3 a day to “boost” the post, get it seen by up to 3,400 people each day, targeted for maximum susceptibility to our message.

It’s not that Facebook isn’t capable of suppressing harmful content: For years it has used artificial intelligence to block posts with nudity from spreading, and it claims to have had success blocking terrorist messaging too. (Mark Zuckerberg recently wrote a lengthy and pretty interesting post on this AI work.)

The problem, as Zuckerberg acknowledges, is that ferreting out disinformation is much harder than spotting boobs or ISIS recruitment. That’s because the whole point of propaganda—getting emotionally resonant content in front of the right audience—is not a bug in Facebook’s technology: It’s the core of its model. (It’s also the core of Trump’s model, which is what makes for such dangerous alchemy.)

A couple of weeks ago we wrote about how this mechanism has been toxic for journalism in a very particular way, by incentivizing newsrooms to feed Facebook’s algorithm. First, that meant clickbait; then, when advertisers grew tired of the churn and burn (detailed by a former Mic editor), Facebook pushed for the fallacious “pivot to video.” Next, stung by bad PR over fake news, it cut back on showing users news, period, and instantly vaporized a lot of the revenue base for journalism. (It’s no accident that this month, Verizon revealed that its digital media division, which includes AOL, Yahoo, and Tumblr along with journalism shops like HuffPost and Engadget, was worth about half what it paid for those companies. RIP the dream of “monetizing audiences at scale.”)

These developments have not been catastrophic for Mother Jones the way they have been for some publishers—advertising revenue makes up less than 15 percent of our budget, and many readers are committed to MoJo no matter the platform—but they do cause some pain. Several million people a month no longer see our stories because of these Facebook changes, and we’ve calculated that the decline in annual revenue is north of $400,000.

(Interestingly, not only is Facebook sending less of an audience to publishers, it’s also making those users less valuable financially: The revenue Mother Jones gets each time the Facebook app loads an ad in one of our stories has fallen by nearly half over the past year.)

Opening our books this way is a little scary, but it’s important at a time when too many news organizations are forced to keep up a cheerful facade for investors. And, it turns out, it resonates with readers too: More than 7,500 people have pitched in to help with our end-of-year reader support campaign, and many more have shared our story.

But asking for your support, as important as it is, is only a small part of the reason why we keep hammering on this issue. The far bigger one is the danger ahead as the political and media environment becomes ever more volatile. And that danger is intensified because—as we’ve learned, once again, in the last couple of weeks—all the economic incentives are lined up for Facebook and the other platforms to continue monetizing our personal information—at the expense of democracy.

Just the last few weeks have brought remarkable revelations on this score, though they got swamped to some extent by the chaos emanating from the White House. To wit:

- The British parliament released a trove of internal Facebook emails that provide a fascinating glimpse inside the senior team in Menlo Park—including their obsession with “ubiquity.” Zuckerberg’s vision for the company, the emails make clear, has been to make sure Facebook is everywhere, all the time. To that end, he struck deals in many countries, especially the developing world, so that the Facebook phone app could access the internet with no data charges. This has meant that for many millions of people Facebook is the internet.

- Nigeria was one of the early targets of the ubiquity push, and the BBC uncovered one of the effects: A tide of fake news and hate speech that has led to multiple politically motivated murders. Facebook has opened up “a new realm of warfare,” one Nigerian military official told the BBC. “It’s turning one tribe against another, turning one religion against another,” warned another. “It has set a lot of communities backward. In a multi-ethnic and multi-religious country like ours, fake news is a time bomb.” (Sound familiar?) In response, Facebook has launched a digital literacy program that partners with 140 schools—about 0.25 percent of the schools in the country.

- As late as the summer of 2018 (!) Facebook was letting Yahoo access your friends’ posts without telling you; allowing Spotify and Netflix to read your messages without your consent; and giving Apple access to contact and calendar info even when you had specifically disabled data access. It was doing all this despite having been embarrassed, dragged before Congress, and excoriated by users for exactly this kind of breach of trust. (Remember how in April, Mark Zuckerberg promised us that we had “complete control” over who could view our information?)

- Russian influence operations in 2016 used Facebook targeting even more relentlessly than was previously known. In particular, fake accounts operated by the Kremlin-connected Internet Research Agency pushed to suppress votes in African-American communities, using both Facebook and (Facebook-owned) Instagram with accounts such as @blackstagram. And these Russian troll accounts didn’t slow down after the election; they doubled down on Trump. As a pair of new reports for the Senate Intelligence Committee notes, the IRA sock puppets have been especially obsessed with the Mueller investigation, claiming only “liberal crybabies” would worry about Kremlin interference. (Recently, Russia’s disinformation agency, RT, has gone after Mother Jones too, seeking in classic form to sow confusion and discredit reporting.)

- Facebook’s VP of global policy, Joel Kaplan, whom you may remember for prominently showing up at the Kavanaugh hearings to support his friend Brett, has increasingly had a hand in decisions on Facebook projects that could be seen as offending conservatives. Among other things, the Wall Street Journal’s Deepa Seetharaman reported that Kaplan helped kill a project to help people communicate across political differences, challenging whether discouraging “hateful” and “toxic” speech was a good idea. He also supported a project to allow prominent pages, such as those of Donald Trump, the extremist group Britain First, and publishers from the New York Times to Breitbart, to post content that users might flag as hate speech.

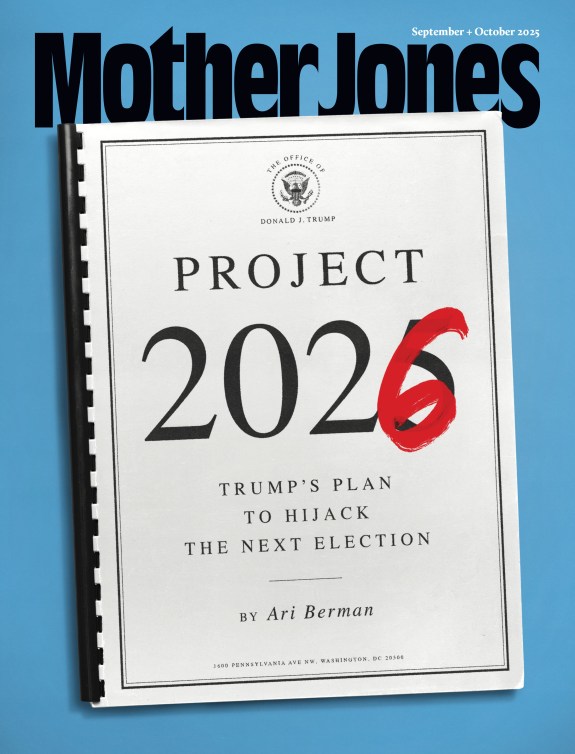

The list goes on, but you get the picture. Demagogues, haters, and spies know how useful Facebook and the other platforms are for undermining democracy. They are ramping up for 2020, and the platforms will not stop them. So who will?

2019 could be a decisive year on this front. For the first time, congressional investigators will truly scrutinize Donald Trump, including the way Facebook facilitated his election. But the platforms have powerful lobbying machines, and they will work hard to tamp down any questions about their oligopoly.

Will we see real hearings about their power? Will the press—so dependent on these companies for revenue and reach, even now—take them to task? Will the rest of us take a hard look at the free services that we pay for with our privacy?

It was always futile to rely on the platforms to make these kinds of decisions for us, but it’s become truly dangerous to continue doing so. In the pursuit of scale, ubiquity, and shareholder value, there’s not much room for a moral compass. The choices are ours to make, and—with only 22 months until an election that could make or break our democracy—it’s time to step up.

Here at Mother Jones, we promise we’ll do our part too. Our new disinformation team (brought about by support from readers!) will investigate the forces behind propaganda, our factcheckers will put reporters through the wringer to ensure you can trust what you read in Mother Jones, and our entire newsroom will zero in on the toughest and most important stories of the moment. We can do that because Facebook doesn’t hold our purse strings—readers do. It’s not easy operating this way (we’re biting our nails right now pushing toward our year-end fundraising goal) but it’s worth it to make sure no one can influence or compromise our reporting. If you can pitch in, please do so now. And may the new year bring sunshine and transparency.