<a href="http://www.istockphoto.com/portfolio/anyaberkut?facets=%7B%22pageNumber%22:1,%22perPage%22:100,%22abstractType%22:%5B%22photos%22,%22illustrations%22,%22video%22,%22audio%22%5D,%22order%22:%22bestMatch%22,%22filterContent%22:%22false%22,%22portfolioID%22:%5B7373136%5D,%22additionalAudio%22:%22true%22,%22f%22:true%7D">anyaberkut</a>/iStock

This story was first published in Pacific Standard.

In the wake of President-elect Donald Trump’s narrow upset victory last week, many journalists and critics have pointed a finger at Facebook, claiming the social network was partly to blame for the growing milieu of false and misleading “news” stories that only serve to insulate potential voters within an ideological cocoon of their own making.

As Facebook continues to influence voter behavior with each passing election, the rising tide of fake news poses an existential threat to conventional journalistic organizations. “This should not be seen as a partisan issue,” sociologist Zeynep Tufekci observed in the New York Times on Tuesday. “The spread of false information online is corrosive for society at large.”

Facebook founder and CEO Mark Zuckerberg flatly rejected the assertion that Facebook shaped the election. “Of all the content on Facebook, more than 99 percent of what people see is authentic,” he insisted; of course, the remaining 1 percent of users still encompass some 19.1 million people. Despite Zuckerberg’s denial, Facebook is now actively reassessing its role in distributing false information. And while the social media giant is taking tiny steps toward addressing the issue (excluding fake news sites from its advertising network, for one), it may take a renegade task force within Facebook itself to force the company to truly understand its outsized influence on how Americans see the world at large.

Until technology companies cope with the structural sources of fake news, it’s up to the American people to rethink their consumption habits. That’s where Melissa Zimdars comes in. A communications professor at Merrimack College in North Andover, Massachusetts, Zimdars recently began compiling a list of “fake, false, regularly misleading and/or otherwise questionable ‘news’ organizations” in a widely shared Google Doc of “False, Misleading, Clickbait-y, and Satirical ‘News’ Sources.” It is a cheat sheet for media literacy in the Facebook age.

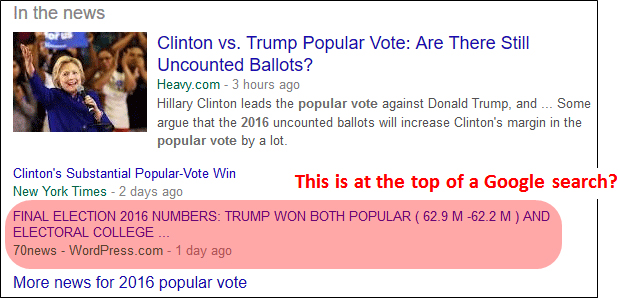

Zimdars’ viral guide, which encompasses websites from the outright fake (nbcnews.com.com) to the ideologically skewed (The Free Thought Project) to the clickbait-inflected (the Independent Journal Review), began as a media literacy companion for her students. She decided to open-source the list after encountering an outright falsehood at the top of her Google News feed: that Hillary Clinton lost the popular vote.

“It’s a WordPress site! 70news.wordpress.com! And Google treated it like news!” Zimdars said when reached by phone on Tuesday. “That’s when I decided to make this public.” Pacific Standard spoke with Zimdars about fake news, Facebook, and the future of media literacy.

What inspired you to put this guide together?

I had been taking notes and making an unofficial list of questionable news sources to share with my students for the last few days, but I put in a lot of effort [on Monday]. The original impetus came from a general concern over the years about the sources students were using in their assignments or alluding to in their talking points. I say this not even as a reflection of where I currently teach; I’ve felt this way at every school I’ve worked at.

I strongly believe that media literacy and communication should be taught at a much younger age. Teachers don’t normally approach this content until the college level, and students continually have trouble determining what aspects of an article and website to examine to determine whether it’s actually something they want to cite or circulate.

There’s a wide variety of sites on your list.

The first category is sites that are created to deliberately spread false information. The 70news.wordpress.com site that was at the top of Google News searches about election results is an example. We don’t really know the intent of some false websites — whether they crop up to generate advertising revenue, or to simply troll people or for comedy purposes — but they all belong to one category: blatantly false.

The second category is websites or news organizations that usually have a kernel of truth to them, relying on an actual event or a real quote from a public official, but the way the story is contextualized (or not at all contextualized) tends to be misrepresentative of what actually happened. They may not be entirely false — there may be elements of “truthiness” to them — but they’re certainly misleading.

The third category I’ve used includes websites whose reporting is OK, but their Facebook distribution practices are unrepresentative of actual events because they’re relying on hyperbole for clicks.

This category has caused the most controversy and, well, been taken as offensive to some publications. Upworthy wasn’t happy about its inclusion on this list; neither was ThinkProgress, which I initially included because of its tendency to use clickbait in its Facebook descriptions. A number of websites, both liberal and conservative publications, have contacted me; one even threatened to file “criminal libel” against me, although I don’t think they know what that means.

These websites are especially troubling because people don’t actually read the actual stories — they often just share based on the headline. I had the Huffington Post on my list of 300 potential additions because they published an article on Monday with a headline that claimed Bernie Sanders could replace Donald Trump with a little-known loophole. The article itself was chastising people for sharing the story without actually clicking it, but so many people were sharing it like, “oh, there’s a chance!” An effort to teach media literacy ended up circulating information that was extremely misleading.

How much of the rise of fake or misleading news sites can be attributed to structural changes in media consumption wrought by Facebook?

Facebook has absolutely contributed to the echo chamber. By algorithmically giving us what we want, Facebook leads to these very different information centers based on how it perceives your political orientation. This is compounded by the prior existence of confirmation bias: People have a tendency to seek out information they already agree with, or that matches with their gut reaction. When we encounter information we agree with, it affirms our beliefs, and even when we encounter information we don’t agree with, it tends to strengthen our beliefs anyway. We’re very stubborn like that.

I haven’t studied this yet, but my assumption is that this trend toward fake news reinforces this confirmation bias and strengthens the echo chamber and the filter bubble. It’s not just the media, but this weird relationship between how the technology works, this proliferated media environment, and how humans engage psychologically and communicatively.

Facebook is currently struggling with how to address these structural causes. What are some potential solutions? I recently read a story about how a group of Princeton University students created an open-source browser extension that separates legitimate news sources from phony ones.

We definitely need media literacy from a young age, but that’s a very delayed process. We can use technology to try to help the situation, but after I read that same article about the Facebook plugin, a reporter from the Boston Globe and I were trying to test it and it didn’t seem to work. I’m glad it’s open source; a lot of programmers had approached me about creating something that people worried about misinformation can actively work on.

But my concern is, ironically, because I’m going through these sources and passing judgment, that as we’re doing this on a structural scale, what will be built as a check and balance for whatever method we end up using? How can some technology solution be dynamic enough and reactive enough so that, if a website improves, or one that has a good reputation goes off the rails, it’s able to adapt? What are the metrics by which we’re categorizing news sources?

This seems like a good case for editors, which Facebook has been dealing with for some time.

Some people argue that part of this problem of fake news is inherently connected to editorial trends in mainstream journalism, from consolidation to a greater emphasis on corporate profits. Editing isn’t inherently a safety measure of this technology, even if it’s clearly necessary.

While tech companies grapple with structural issues, what needs to be done to engender media literacy in our classrooms and, I suppose, in our households?

It starts with actually reading what we are sharing. And it’s hard! Look, I’m a professor of media and I’ve been guilty of seeing something posted by a friend I trust and sharing it. I’ve been complicit in this system. The first thing we need to do is get people to actually read what they’re sharing, and, if it’s too much trouble to do that, we’re going to have serious difficulty getting people to look up and evaluate their sources of information.

One of the best things people can do is police websites that are spam or fake on Facebook. But when someone asked me about engaging with people, my advice was “do so at your own risk.” I’ve had tons of trolls and hateful messages and comments since I made this Google Doc public. You have to be prepared to deal with that stuff if you’re even going to try to course-correct misinformation on the Web.

So what, in an ideal world, is the solution here? What’s the future you envision for a cheat sheet like yours?

I think librarians should rule the world! I’ve been approached by people about creating more durable and dynamic documents that can go through a rigorous process to determine how resources are included or excluded or categorized. It’s like trying to index the entire Internet, and it feels impossible, but if we could start holding a few of the major sources of misinformation accountable, that would be important to me.