Mother Jones illustration

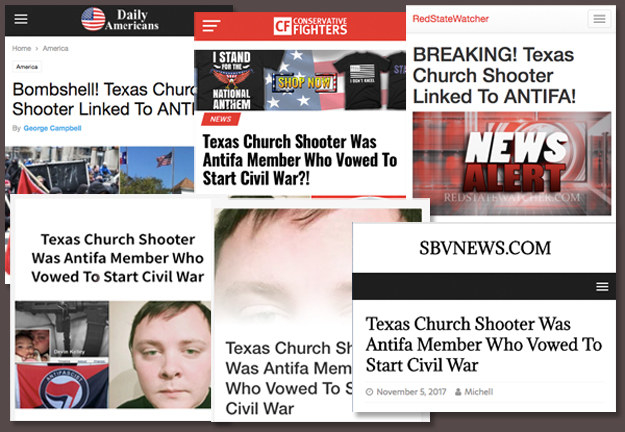

Just hours after the mass shooting at a church in Sutherland Springs, Texas, earlier this month, conspiracy theories linking the gunman, Devin Kelley, to far-left antifa groups began spreading rapidly online. What began as speculation on Twitter soon became a commonly accepted theory after being shared by some of the right's most high-profile conspiracy theorists, including Mike Cernovich and InfoWars' Alex Jones. The theory gained so much traction that tweets promoting it appeared at the top of Google search results, and typing in "Devin Kelley" produced auto-complete suggestions that included "antifa."

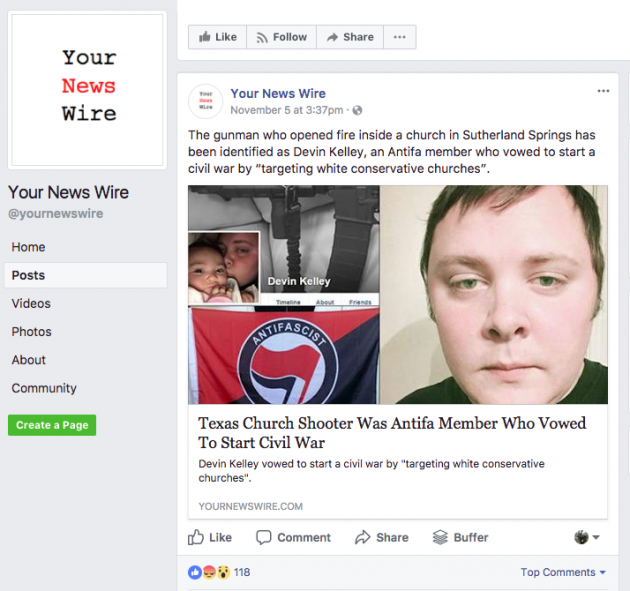

On the day of the shooting, the alleged connection also became the basis for a story by YourNewsWire. Though the website resembles a legitimate news site, it has repeatedly pushed false reports in the past, including during the infamous Pizzagate scandal last December. This story incorrectly claimed that Kelley was one of two shooters at the church and, citing a supposed eyewitness account, falsely stated that Kelley had vowed to start a communist revolution. The site also created a doctored image of Kelley's Facebook account with an antifa flag photoshopped in. The story and accompanying images were soon picked up by other outlets, including RT, and shared across social media platforms and on YouTube.

The Devin Kelley-antifa claims were debunked almost immediately, first in a Facebook post by Antifa United, which said the photo of the flag had been taken from its online store, and soon after by BuzzFeed, Snopes, and PolitiFact. Nevertheless, YourNewsWire's story continued to circulate on social media for days after the shooting. Though some of the Facebook posts sharing it are no longer available, the YourNewsWire story has been liked, commented on, or shared on Facebook more than 267,000 times, far outnumbering its engagement on any other platform, according to the analytics site BuzzSumo.

Just how quickly—and prominently—this bogus story spread highlights how difficult it has been for social media companies, and Facebook specifically, to combat misinformation, despite high-profile, positive-PR-generating efforts to do so.

Last December, after initially downplaying the amount of misinformation on the platform, Facebook announced it would partner with third-party organizations to fact-check stories posted to people's feeds. But 11 months into the initiative, misinformation and conspiracy theories continue to proliferate and reach huge audiences on the platform, and Facebook has released very little public data showing whether its efforts are working. The result is an effort that lacks transparency and a public still in the dark about whether the items in their news feeds are legitimate.

“The honest answer is that we don’t know [if the checking is working] because Facebook hasn’t shared real data about it,” says Alexios Mantzarlis, director of the Poynter Institute’s International Fact-Checking Network, which vets Facebook’s fact-checking partners. “Until we get a sense of what the traffic was for viral hoaxes before and after this tool was put into place, we can’t evaluate it. We can’t understand what’s happened.”

BuzzFeed compiled screenshots of sites that shared the Devin Kelley antifa story.

BuzzFeed

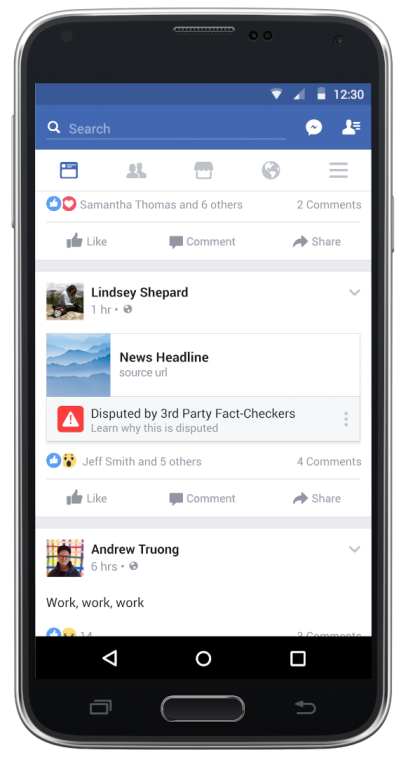

In announcing the initiative last year, Facebook formed partnerships with several media organizations, such as ABC News, Snopes, PolitiFact, and FactCheck.org, to fact-check stories on its platform. The process is fairly straightforward: Once Facebook determines that a story might be false, based on user reports, comments under the story, or other signals, the story is added to a dashboard accessible to fact-checkers at these organizations. After two fact-checkers mark it as false, the story is visibly flagged for Facebook users as "disputed by third-party fact-checkers." Facebook's algorithm then begins demoting the story in news feeds, but the company does not remove content outright unless it violates its community standards.

That's more or less the extent of the public's understanding. The only data Facebook has provided so far comes from an internal email leaked to BuzzFeed in October, which Facebook subsequently confirmed: Once a fact-checker rates a story as false, future impressions of the story are reduced by 80 percent. It's unclear, however, how many stories have actually been disputed on the platform.

One of the issues the email highlights is that surfacing a story and marking it as false can take more than three days. That delay matters because, as Jason White, who oversees Facebook's news partnerships, wrote in the leaked email, "[W]e know most of the impressions typically happen in that initial time period."

An example of a disputed story.

Since Facebook launched the fact-checking project, a number of critics have warned that the effort does not go far enough. A study from two Yale researchers recently argued that flagging a post as “disputed” does little to stop people from believing a story’s claims: Readers are only 3.7 percent less likely to believe a story after seeing the flag. (A Dartmouth study, however, found the effect to be somewhat larger.) The Yale study also noted that, in some cases, the “disputed” flag could even make some groups more willing to believe the story.

Even the journalists working as Facebook's fact-checkers have expressed frustration with the lack of data on how much their efforts are curbing the spread of false information, according to a recent Guardian article. "I don't feel like it's working at all. The fake information is still going viral and spreading rapidly," said one fact-checker who spoke anonymously. "They think of us as doing their work for them. They have a big problem, and they are leaning on other organizations to clean up after them." One source told the Guardian it was rare for stories to get a "disputed" tag, even after a fact-check, and that Facebook had not provided any internal data on how often stories are actually getting tagged.

Making matters even more complicated, several organizations known for peddling viral hoaxes are verified on Facebook, with a blue checkmark indicating that they're an authentic public figure, media company, or brand. Individuals or media companies can request verification badges by providing government-issued ID or tax documents. (Businesses are verified with a gray checkmark and go through a simpler process that requires only a publicly listed phone number.) Even if these badges aren't meant to endorse a publication's content, Media Matters for America president Angelo Carusone argues that they can help spread misinformation by making a page, and the information it shares, seem more authentic than it really is.

“Intuitively, it’s persuasive. People are going to see [these groups] as legitimate publishers,” says Carusone. “If you have a choice between two unknown publications, and one has a checkmark, most people are going to go with the one with a blue checkmark. We’ve been programmed to believe that verification implies some kind of credential, and I do believe that helps [fake news sites] grow.”

YourNewsWire, for instance, shared the Kelley-antifa story through one of its verified Facebook pages, "The People's Voice," garnering around 5,400 shares from that post alone. Though that post is no longer available, another post promoting the YourNewsWire story, from a different Facebook page, remains on the platform. YourNewsWire's Facebook page was also previously verified as a business but has since lost its verification. (As of this writing, Facebook had not responded to an inquiry asking why.)

A post from YourNewsWire that is still up on Facebook as of Nov. 16.

Screenshot via Facebook

A screenshot of The People's Voice Facebook page, which is listed as YourNewsWire's owner and regularly posts stories from the site.

Screenshot via Facebook

YourNewsWire's Facebook page, which used to be verified as a business.

Screenshot via Facebook

Twitter, notably, suspended all of its verification processes last week after criticism over its verification of Jason Kessler, a white nationalist blogger who organized the "Unite the Right" rally in Charlottesville, Virginia. "Verification was meant to authenticate identity & voice but it is interpreted as an endorsement or an indicator of importance," the company's support account tweeted. "We recognize that we have created this confusion and need to resolve it." On Wednesday, Twitter revised its verification requirements and began removing verification badges from some users, including Richard Spencer, a prominent white nationalist, and Laura Loomer, a far-right commentator. The company says it will no longer accept requests for verification as it works on a "new program [it is] proud of."

A Facebook spokesperson tells Mother Jones that the company takes combating fake news seriously and there is no “silver bullet” to fighting misinformation. The spokesperson, who asked to be identified generally as a spokesperson per Facebook policy, mentioned that Facebook has introduced a number of initiatives in addition to partnering with third-party fact-checkers, including providing tips on how users can spot false information and showing a selection of related articles under a story to provide more context. And in August, Facebook said it would also block pages that repeatedly share false information from advertising on the platform. Though Facebook does not comment on specific pages, it says it does remove verification badges if a page violates one of Facebook’s policies.

The spokesperson also says Facebook is “encouraged by the continued success of [its] fact-checking partnerships” and that it will provide an update on the initiative before the end of the year. Beginning in 2018, it plans to send more regular updates to its partners.

“We are going to keep working to find new ways to help our community make more informed decisions about what they read and share,” the spokesperson tells Mother Jones.

Experts generally don't advocate removing or censoring content outright, but they argue there's still more Facebook could do. Media Matters' Carusone says it could, for instance, require disclaimers on certain pages or exercise more discretion in its verification process. Carusone says he doesn't want censorship but insists that Facebook shouldn't privilege sites that repeatedly abuse its algorithms. "They shouldn't let bad actors cheat the system and create more chaos simply because they don't want to be perceived as biased," says Carusone.

Poynter's Mantzarlis says that while it's helpful that Facebook is "targeting the worst of the worst" and going after fully fabricated content, the real issue is that the company still needs to be much more transparent about its processes. He also points out that the fact-checking project doesn't tackle content such as memes or videos, which are often how viral hoaxes spread. Content on Instagram, which Facebook owns, may also play a far bigger role in spreading misinformation than previously estimated.

“I think there are people at Facebook who care about this issue,” Mantzarlis says, “but until we see the data, we’re stuck.”