Protesters clash in Ferguson, MO, March 2015

Jeff Roberson/AP

Even @jack got duped by them. Shortly after a Kremlin-run troll factory known as the Internet Research Agency was first exposed last October by Russian journalists, the Daily Beast reported that Twitter’s CEO, Jack Dorsey, had twice retweeted an account that claimed to be an African-American woman but was in fact operated by the IRA in St. Petersburg, Russia.

Dorsey retweeted content in March 2017 from @Crystal1Johnson that referenced #WomensHistoryMonth and said “Nobody is born a racist.” But @Crystal1Johnson was also highly active in a heated social-media war over racial justice and police shootings that played out during the 2016 presidential campaign. Now, new research out of the University of Washington shows the troll that duped Dorsey was among 29 known Russian accounts infiltrating both left-leaning and right-leaning sides of a Twitter melee that included shooting-related keywords and the hashtags #BlackLivesMatter, #BlueLivesMatter and #AllLivesMatter.

As Twitter shares more about the actions of thousands of known Russian accounts – including the company’s recent revelation to Congress that in the two months before the election, Russian-linked bots retweeted @realdonaldtrump 470,000 times vs. retweeting Hillary Clinton’s account fewer than 50,000 times – the UW research sheds further light on a deliberate strategy the Russian government used to play both sides of an emotional American debate.

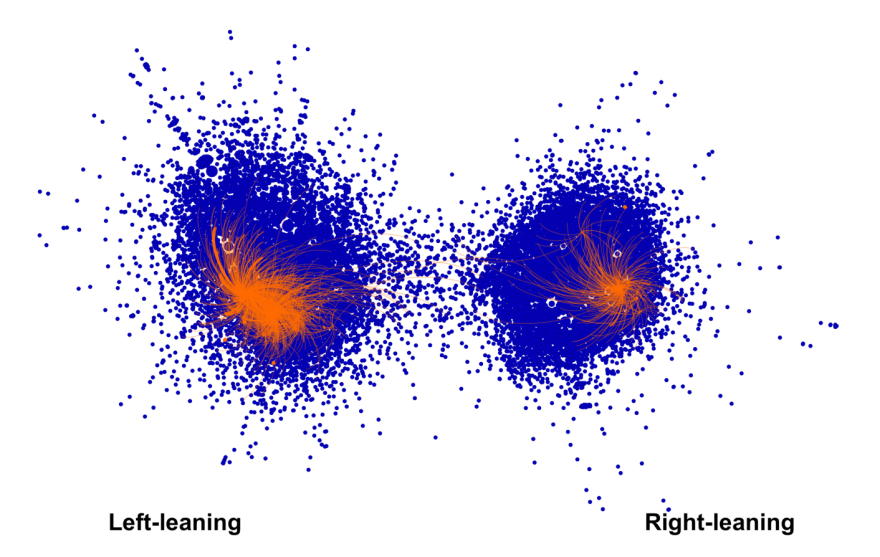

In data collected over a nine-month period ending in October 2016, the UW team found clearly defined left-leaning and right-leaning clusters – about 10,000 accounts in each sphere – tweeting about police shootings and including the three hashtags related to #BlackLivesMatter. Two of the Russian accounts found were among the top 12 most-retweeted accounts overall across all 20,000 accounts. “This suggests that troll content was relatively widely broadcasted in the contexts of this network,” the research team wrote in their findings. “On both sides, we see troll accounts gaining traction in polarized, audience-driven discourse.”

“It’s striking how systematic the trolls were,” says Ahmer Arif, one of the researchers on the study. The operation, he says, was sophisticated enough to exploit both sides: “The content they’re sharing is tailored to align to each audience’s preferences.”

Orange depicts every time a known Russian troll account in the dataset was retweeted around shootings and #BlackLivesMatter-related hashtags over a nine-month period ending in October 2016.

University of Washington Human Centered Design & Engineering Program

“It is important that we come to see online disinformation not just as a problem of the other ‘side’ but as something that is targeted at all of us,” UW professor Kate Starbird, who heads the project, told Mother Jones by email.

The new research builds on previous work by the UW team to analyze the divisive nature of the Twitter conversations around police-involved shootings. When the initial list of 2,752 Kremlin-linked account names was released last fall, the researchers recognized some of the handles and cross-referenced the list with their data.

Among those handles was @BleepThePolice, another highly retweeted troll account on the political left. The researchers say such names reflect the Russian operation’s media savviness and familiarity with U.S. domestic politics. “The trolls could blend in with the crowd fairly well,” says Leo Stewart, another researcher on the team. “You might think you could identify a troll account, but they were able to successfully create these personas.” (Russian accounts are hardly alone in creating the fake personas proliferating on Twitter; a New York Times investigation published Saturday, “The Follower Factory,” showed how an American company, Devumi, created 3.5 million automated bot accounts – with more than 55,000 of those appropriating the identities of real Twitter users – and sold them to customers like celebrities and politicians.)

Twitter recently updated its count of known IRA-linked accounts to 3,814 – plus 50,000 automated bot accounts it says are connected to the Russian government – but the list of additional account names has not been released. The UW team says that if additional names are revealed, they will continue to mine their 2016 data to better understand the trolls’ reach.

Twitter also has started informing 677,775 users that they interacted with Russian accounts – but Starbird says the company is missing an opportunity to provide more information about how users were manipulated on its platform. “Instead of sending a vague letter telling us that we interacted with some troll account, they could send personalized letters telling us exactly which accounts we interacted with, when, and how,” Starbird wrote via email. “This would be far more impactful in terms of how we understand propaganda and disinformation, and how we view our own role within the information space.”

Reached by Mother Jones, Twitter declined to make Dorsey available for comment and referred questions about its user-notification emails to the company’s recent blog post concerning the 2016 election. That post includes a screenshot of the @Crystal1Johnson account tweeting about police shootings on Sept. 21, 2016. UW researchers note that while the tweet is on the topic and within the time period they analyzed, it wouldn’t be captured in their data because it doesn’t include a hashtag related to #BlackLivesMatter. “We only have a slice of what’s being shared in this space,” Arif says.

Playing both sides of a political issue is a standard Russian propaganda tactic, national security experts say. When Congress released images from the troll factory’s Facebook campaigns last fall, the ads shown clearly targeted Black Lives Matter and LGBT audiences on the left, as well as gun-rights audiences on the right. “This is consistent with the overall goal of creating discord inside the body politic here in the United States, and really across the West,” former CIA officer Steven Hall told CNN at the time. “It shows the level of sophistication of their targeting. They are able to sow discord in a very granular nature, target certain communities and link them up with certain issues.”

In the UW team’s Twitter data from 2016, the researchers are still studying the content of the trolls’ tweets and the links that they included; their preliminary analysis shows the Russian accounts pushing out some of the most extreme content on each side. “There’s this caricaturing going on,” Arif says. “One of the effects of amplifying this type of content is it can undermine public discourse by constructing outrage.”

From Congress to the private sector, there is growing pressure on social media companies to take more aggressive actions against fake accounts; following the publication of the Times investigation, celebrity mogul Mark Cuban called on “@twitter to confirm a real name and real person behind every account.” To date, the company has said relatively little about any plans for combatting the problem.

https://twitter.com/mcuban/status/957686987229618176

In the meantime, researchers like those at UW are trying to shed more light: “Our goal is to help people understand how they’re being manipulated, but not necessarily give any prescriptive advice on how they should or shouldn’t engage in particular conversations,” Arif says. “It’s just to understand that there might be voices in these spaces who may not be who they seem, and that’s not just on one side of the political spectrum. And in some cases, these voices represent the most extreme.”