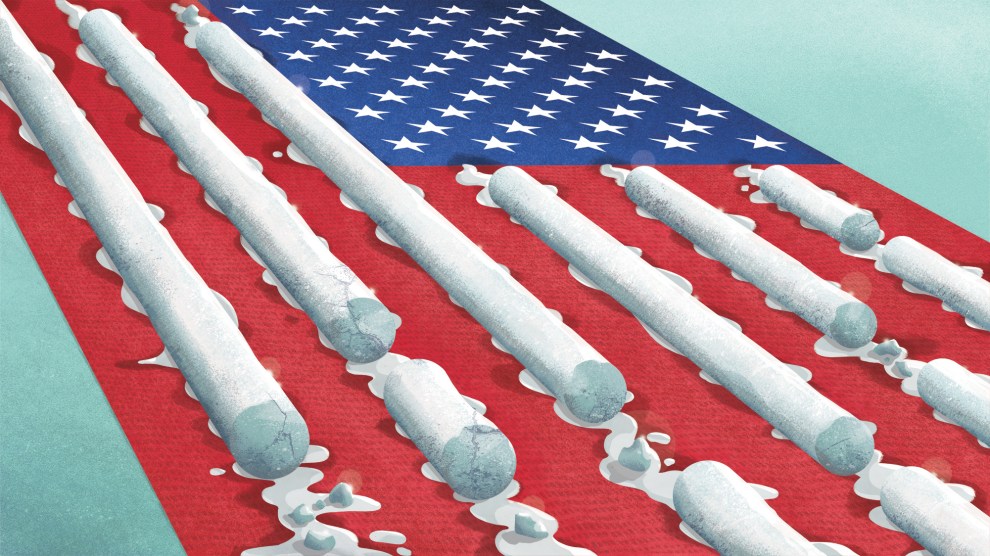

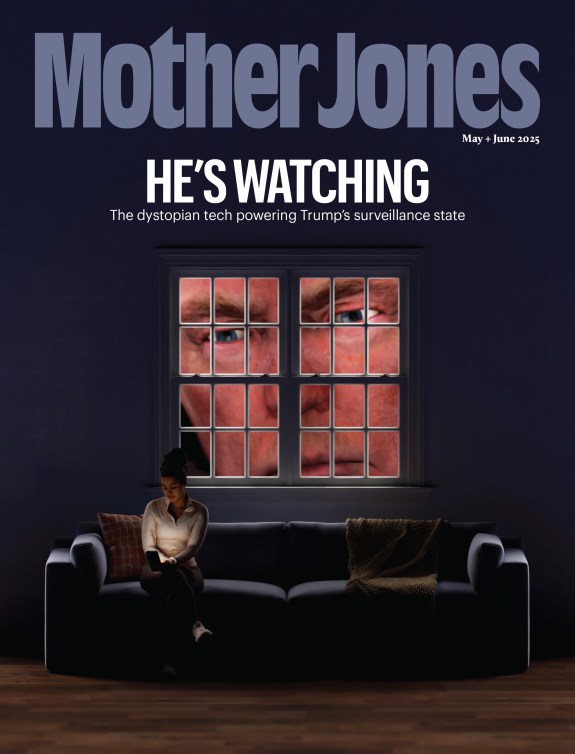

A T-Mobile job ad targeted at Facebook users ages 18 to 38. Image from the lawsuit against T-Mobile and Amazon.

Facebook has taken significant steps this year to address charges that its ad targeting tools have allowed advertisers for jobs, housing, and loans to discriminate against minority groups. But what if all Facebook ads in these categories are illegal? That’s the explosive claim made by attorneys in an age discrimination lawsuit that goes straight to the heart of Facebook’s billion-dollar business model.

Most advertisers can opt to advertise their products to specific audiences, excluding certain ages, genders, or races. For example, there’s no requirement that a fashion designer advertise men’s clothing to women. But ads in some industries are regulated by federal law. It is illegal, for example, to exclude women from employment ads. Under federal civil rights laws, ads for housing, employment, and loans cannot target or exclude certain races, genders, ages, religions, or other so-called protected characteristics.

Facebook has come under fire for enabling advertisers to select which demographics they want to reach with ads for homes, jobs, and credit. Under a settlement reached in March to resolve multiple lawsuits, Facebook agreed to limit the criteria advertisers could use to select an audience for ads in these categories in order to stop discriminatory ad targeting.

But a lawsuit that could go to trial this year argues that even when advertisers aren't excluding certain groups, Facebook's algorithms still deliver ads in a discriminatory way, in this case by excluding older people. That's because the algorithms are designed to deliver ads to the users most likely to click on them. Often, the lawsuit alleges, Facebook determines that older users are less likely to be interested in a job and therefore prevents them from seeing job ads, even when the advertisers do not exclude them. The lawsuit, from the Communications Workers of America, was not actually filed against Facebook but against Amazon and T-Mobile, which the suit alleges purposefully excluded older people from seeing their Facebook ads.

The suit was initially filed in 2017, but it is still pending and could take on new significance in the wake of the settlement. Next week, Amazon and T-Mobile are expected to file their motion to dismiss. After that, federal judge Beth Labson Freeman will likely decide whether the case can proceed to trial later this year.

If it moves forward, the biggest consequences might not be for the defendants but for Facebook. Lawyers for the Communications Workers allege that even if Amazon and T-Mobile had not deliberately directed their employment ads to younger audiences, as the lawsuit claims they did, Facebook's algorithms would have done it for them.

“It’s no different than if you hire a recruiting firm and you know that that recruiting firm is going to disproportionately not recruit women or older people,” says Peter Romer-Friedman, an attorney at the employment law firm Outten & Golden LLP who is representing the Communications Workers and three older plaintiffs in the suit against Amazon and T-Mobile. Romer-Friedman says the goal is for the court to prohibit Amazon and T-Mobile from advertising on Facebook until Facebook removes age from its ad delivery algorithms.

But the implications are much broader than one case and one protected class of workers. Researchers have found that Facebook's ad delivery skews along demographic lines in ways that can be discriminatory. Facebook has so much data on every user that even if its advertising algorithms don't explicitly use age, they can, and likely will, still sort people by age based on their likes, activities, purchases, friends, and other indicators. In other words, an algorithm without age data might still learn that users who like the Jonas Brothers are more likely to click on an ad for a job at T-Mobile than users who like Bob Dylan, and so steer that job ad to younger users.
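To make the proxy problem concrete, here is a toy Python sketch. Everything in it is hypothetical: the users, the single "likes the Jonas Brothers" signal, and the ranking rule are illustrative assumptions, not Facebook's actual system, whose inner workings are not public. It only shows that a model that never reads age can still deliver an ad mostly to younger people when an interest it does read happens to correlate with age.

```python
from statistics import mean

# Hypothetical history of a past job ad: (age, likes_jonas_brothers, clicked).
# Age is stored only so the outcome can be audited; the model never reads it.
history = [
    (22, True, True), (25, True, True), (29, True, False), (31, True, True),
    (45, False, False), (52, False, False), (58, False, True), (63, False, False),
]

def click_rate_by_interest(rows):
    """Estimate the past click rate for each interest group, ignoring age."""
    rates = {}
    for flag in (True, False):
        clicks = [clicked for _, likes, clicked in rows if likes == flag]
        rates[flag] = sum(clicks) / len(clicks)
    return rates

def deliver(candidates, rates, budget):
    """Show the ad to the `budget` candidates whose interest group clicked most."""
    ranked = sorted(candidates, key=lambda user: rates[user[1]], reverse=True)
    return ranked[:budget]

# Hypothetical new audience: (age, likes_jonas_brothers).
candidates = [(24, True), (61, False), (33, True), (49, False), (27, True), (56, False)]

rates = click_rate_by_interest(history)
delivered = deliver(candidates, rates, budget=3)
print(rates)  # {True: 0.75, False: 0.25}
print(f"average age of eligible users: {mean(a for a, _ in candidates):.1f}")  # 41.7
print(f"average age of users shown ad: {mean(a for a, _ in delivered):.1f}")   # 28.0
```

Age appears nowhere in the ranking, yet every user who sees the ad is under 35, because the interest signal the model relies on stands in for age.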

Facebook has been wrestling with the problem of illegal ad targeting for three years. In the spring of 2016, ACLU attorneys realized that Facebook's ad targeting tools, which allowed advertisers to select an audience by race, gender, age, and other protected characteristics, were available when placing ads for housing, jobs, and credit. This led to negotiations with Facebook and eventually to multiple lawsuits. In March 2019, Facebook settled several of those cases by agreeing to block advertisers from illegally targeting ads for housing, employment, and credit.

Later this year, Facebook will launch a new ad portal specifically for these three industries with scaled-back targeting options. Housing ads, for example, can no longer be sent only to white people; black Facebook users cannot be singled out for predatory loan ads.

But the settlement dealt with only half the problem. Advertisers can no longer discriminate when placing an ad. But there is still no mechanism to ensure that Facebook isn’t discriminating when it delivers the ad.

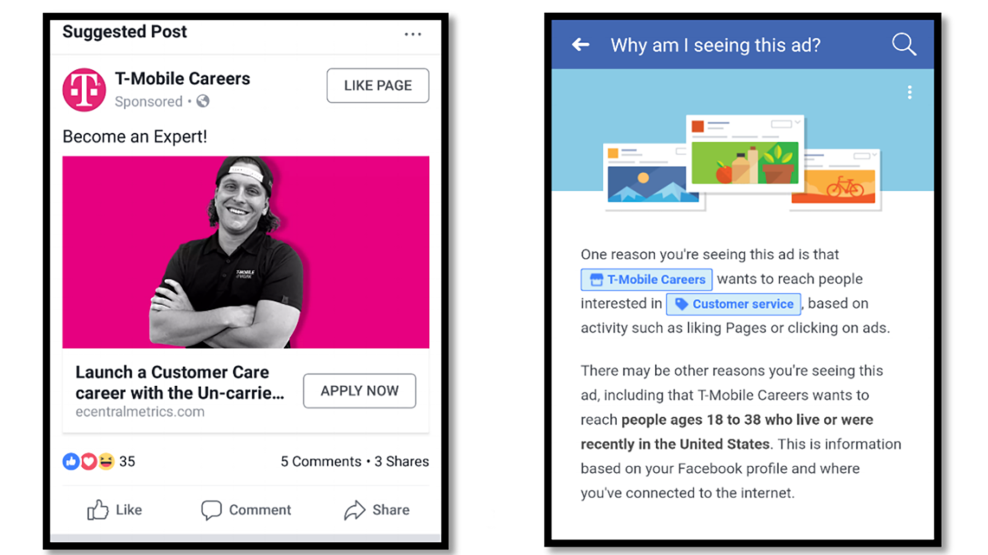

Before an ad reaches a Facebook user's news feed (or reaches a user on Instagram, Messenger, or WhatsApp, all of which are owned by Facebook), it goes through two steps. The first is targeting, in which the advertiser selects its audience. The second is delivery, in which Facebook takes over and chooses which users within the advertiser's parameters will actually see the ad. If an advertiser opts to send a computer programming job ad to people with a master's degree, it almost certainly is not paying enough to send the ad to every Facebook user with a master's degree. So Facebook picks which people with master's degrees will actually see the ad. And while the delivery process is a black box, researchers believe that Facebook is marshaling the vast data it has on every user to figure out who is most likely to click on the ad.

And those data almost certainly contain information like age and gender, as well as indicators of race, disability, religion, and national origin.
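The two steps described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not Facebook's implementation: the `User` fields, the `target`, `deliver`, and `share` helpers, and especially the `predicted_click` scores are hypothetical, and the scores are made up and deliberately correlated with gender to show how skew can enter at the delivery step even when the advertiser's targeting says nothing about gender.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class User:
    name: str
    has_masters: bool
    gender: str             # used only to audit the result, never to rank
    predicted_click: float  # made-up score standing in for the platform's click prediction

def target(users, criterion):
    """Step 1 (advertiser): keep only users who match the chosen criterion."""
    return [u for u in users if criterion(u)]

def deliver(audience, budget):
    """Step 2 (platform): of the targeted users, show the ad to the `budget`
    users with the highest predicted chance of clicking."""
    return sorted(audience, key=lambda u: u.predicted_click, reverse=True)[:budget]

def share(users, gender):
    """Fraction of a group of users with the given gender."""
    return sum(u.gender == gender for u in users) / len(users)

# Hypothetical users; the scores are invented to be higher for men.
users = [
    User("a", True, "female", 0.02),
    User("b", True, "male", 0.09),
    User("c", True, "female", 0.03),
    User("d", True, "male", 0.08),
    User("e", False, "female", 0.10),
    User("f", True, "male", 0.07),
    User("g", True, "female", 0.01),
]

targeted = target(users, lambda u: u.has_masters)  # advertiser: master's degree only
shown = deliver(targeted, budget=3)                # budget covers only 3 of 6 users
print(f"targeted: {share(targeted, 'male'):.0%} male")  # targeted: 50% male
print(f"shown:    {share(shown, 'male'):.0%} male")     # shown:    100% male
```

The advertiser's audience is evenly split, but because the budget covers only half of it, the platform's ranking decides who is left out, and in this toy case every woman is.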

This spring, several academics partnered with Upturn, a nonprofit that studies the intersection of civil rights and technology, to test Facebook's ad delivery. Although the researchers selected the same target audience for multiple employment ads, Facebook delivered ads for taxi drivers to an audience that was 75 percent black and ads for supermarket clerks to an audience that was 85 percent female. Among housing ads with the same targeting parameters, the researchers found significant skews: One reached an audience that was 85 percent white, another an audience that was just 35 percent white.

“Our best guess is that when an advertisement runs, Facebook’s computers look at the ad and say, ‘Based on ads like this that we’ve run in the past, what kind of person is most likely to click on it?’” says Aaron Rieke, managing director at Upturn and one of the study’s authors. “And our assumption is that in making that judgment, Facebook’s computer systems consider almost all the data it has available to it.”

Facebook has committed to studying the issue of algorithmic bias, as part of both its March ad discrimination settlement and an ongoing audit of the civil rights impact of the company’s policies. But it has not committed to making any changes to its algorithms.

The plaintiffs suing Amazon and T-Mobile aren’t the first people to raise this issue. In March, the Department of Housing and Urban Development charged Facebook with discriminatory practices in housing ads, initiating a process for adjudicating the discrimination claim before an administrative law judge within HUD. Even if an advertiser tried to target a housing ad to a diverse audience, HUD alleged, Facebook’s ad delivery system would override that request by delivering the ad more narrowly to the people in that audience it calculates are most likely to click on the ad.

Facebook disputed the allegation at the time. “HUD had no evidence and finding that our AI systems discriminate against people,” the company told ProPublica.

Judge Freeman may not ultimately consider this issue of Facebook’s algorithms, since it is not the main focus of the case. But sooner or later, a court is likely to rule on how much demographic skewing in ad delivery is allowed under the law.

“My crystal ball prediction is that sometime in the next five years, one of these cases will get far enough where a court is going to be faced with crafting some sort of answer to this question,” says Rieke. “It seems impossible that there wouldn’t be a case that will have to grapple with this sometime soon.”