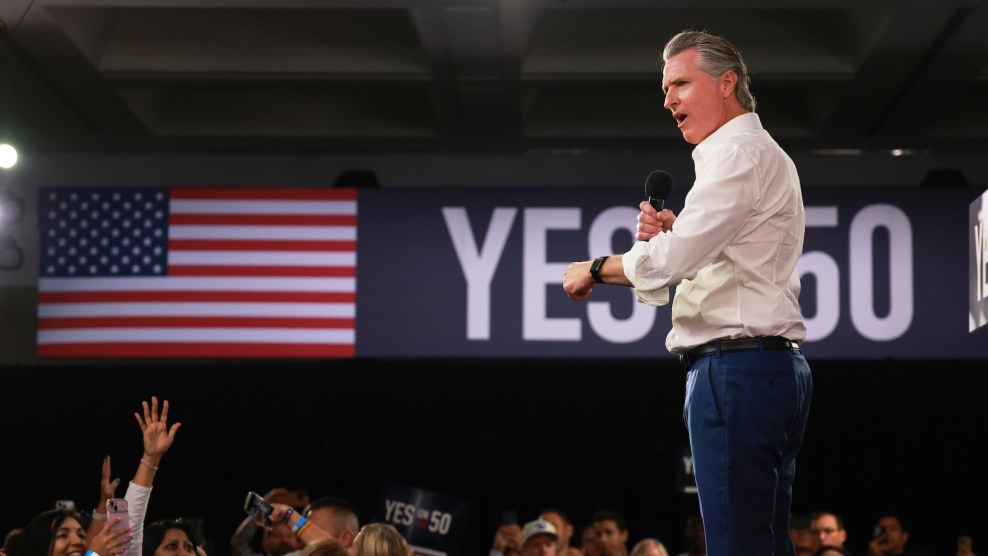

Facebook CEO Mark Zuckerberg testifies before the House Judiciary Subcommittee on Antitrust, Commercial and Administrative Law on July 29, 2020. MANDEL NGAN/POOL/AFP via Getty Images

Last year, Samantha Liapes wanted to buy insurance. She needed insurance for her home. She needed insurance for her car. And she needed life insurance. These financial products can prevent financial disaster and protect loved ones. They also come at varying price points. For consumers, the insurance market can be confusing—it can be hard to know how to even start looking for a policy or what rates are possible. To reach potential customers, the industry has turned to one of the world’s largest advertising platforms: Facebook.

But according to lawyers representing Liapes and other California residents, Facebook hasn’t been showing Liapes insurance ads. The reason, they allege in a lawsuit, is that Facebook pushes insurance ads away from women and older users, including Liapes. Facebook denies any discrimination in this case—but it asserts that as a digital platform, it has the legal right to send different ads to people of different ages and genders.

The Liapes case is one of many in recent years that have tried to change how Facebook serves ads to its users in areas covered by state and federal civil rights laws, including housing, employment, and financial services. It’s also part of a larger question confronting Silicon Valley: Are internet platforms subject to the civil rights laws that govern other businesses? A restaurant cannot offer women one menu and men another. When it comes to certain ads, can Facebook offer some opportunities to men but not women?

Offline, this kind of discrimination is illegal. At the federal level, laws dating to the 1960s and 1970s ban discrimination in opportunities for housing, employment, and credit. But some state public accommodations laws go much further, protecting more people against a wider range of discrimination outside these select areas. “Public accommodation laws are some of the most important civil rights laws out there,” says David Brody, senior counsel at the Lawyers’ Committee for Civil Rights Under Law and the leader of its Digital Justice Initiative. “They were a cornerstone of reducing Jim Crow segregation.” These days, Brody says, public accommodations laws “can have a very big role in preventing and reducing that type of online segregation and redlining.” Brody and the Lawyers’ Committee submitted an amicus brief supporting Liapes’ suit against Facebook.


The case, filed last year, alleges that the social media giant is violating California’s sweeping public accommodations law, the Unruh Civil Rights Act, by directing ads for insurance policies away from women and older users. Depriving these users of equal access to insurance products, the suit argues, constitutes gender and age discrimination. Facebook’s business model relies on its ability to direct ads to users who will click on them. If Facebook is targeting more insurance ads to men and younger users—which discovery and a trial could help determine—it’s likely because the company’s algorithm has found they are more likely to click on the ad.

“There are many, many ad campaigns for economic opportunities on Facebook, where Facebook, and sometimes Facebook and their advertisers, are using gender and age to decide that women and older people will be denied advertisements for these economic opportunities,” says Peter Romer-Friedman, one of the lawyers who filed the suit. “By denying that service, because of gender and age, Facebook is clearly providing inferior and discriminatory services for women and older people.” Facebook rejects this allegation and is asking the judge to toss out the case. A hearing on the company’s motion is scheduled for mid-June. The company did not respond to a request for comment from Mother Jones.

Not all state public accommodations laws apply to online businesses. But California’s does. That would seem to make this a clear-cut case about the facts: Are the ads indeed being directed away from women and older people, and if so, is Facebook doing so purposefully? But Facebook’s defense strikes at a key tension that will help determine the fairness of internet platforms going forward. The company argues not only that it is not discriminating, but also that it is immune from the inquiry altogether. The argument arises from Section 230 of the federal government’s 1996 Communications Decency Act, which immunizes internet publishers from liability over what third parties post on their platforms. Facebook, the company’s lawyers argue, is therefore immune from claims of discrimination in how it delivers its ads because ads are third-party content.

“I think the future of the internet is at stake in this case and in other cases that are outlining the scope of liability for discriminating against a platform’s users,” says Romer-Friedman. Certainly, if the courts take this case and others like it seriously, it could augur serious changes in how Facebook and other platforms that target personalized ads do business.

To understand the allegations against Facebook, it’s important to understand Facebook’s ad delivery process. The lawsuit lists 14 ads that Liapes, who was 46 when the suit was filed, says she did not receive—allegedly because of her age and gender. Facebook disputes this. “If, as Plaintiff alleges, she was interested in insurance ads on Facebook but did not receive those” listed in the complaint, the company’s lawyers wrote in response, “the most reasonable inference is that the algorithm delivered other insurance ads to her News Feed or determined that she would find other types of ads even more interesting.”

Facebook’s ad targeting process has two steps, and the suit alleges discrimination in both. In the first stage, the advertiser selects a target audience using Facebook’s tools. One option is to choose targeting criteria, such as users’ geographic location and interests, as well as age and gender. Last August, Facebook eliminated the option of targeting ads by “multicultural affinity,” a category that Facebook never defined but that was viewed as a broad proxy for race. However, other potential proxies for race, such as zip code, remain available to advertisers. Alternatively, an advertiser can upload the contact information for its existing customers and ask Facebook to find similar users to advertise to based on the characteristics of its current client base, a feature called Lookalike Audiences. Once this is done, the advertiser’s role is complete.

The second step is directed entirely by Facebook. Facebook uses its own ad delivery algorithm to distribute the ads within the advertiser’s selected audience. This algorithm weighs thousands of inputs to send an ad to the people Facebook calculates are most likely to engage with it. That ability to precisely target users is what makes placing ads on Facebook so appealing to businesses and so lucrative for Facebook. Last year, the company brought in nearly $86 billion in revenue, of which $84 billion came from selling ads.

The suit alleges that Facebook discriminates in the first step by encouraging insurance advertisers to limit the ad’s audience by gender and age, and by applying gender and age when developing Lookalike Audiences. In the second phase, the suit claims, Facebook’s ad delivery algorithm uses age and gender to direct insurance ads away from women and older users, even if the advertiser hadn’t placed those limits in the first stage.

Whether Facebook’s delivery algorithm is sending insurance ads away from women and older people is hard to prove because there’s no transparency into the algorithm itself. Instead, civil rights groups and researchers learn about the algorithm by running tests in which they buy ads, do not select any age or gender targeting, and then watch to see whether the ads are delivered with an age or gender skew. Multiple such studies have found that the delivery algorithm does indeed discriminate.

Facebook’s algorithm learns from its users. If men click more on an ad, it learns to send the ad to men to maximize engagement. “In these areas where the advertisements are for important life opportunities, and we’re trying to change really biased demographic patterns in society, their algorithms shouldn’t be tuned just to go along with that,” says Aaron Rieke, managing director at Upturn, a nonprofit that promotes civil rights in technology and filed an amicus brief in this case in support of the plaintiffs.
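To see how an engagement-maximizing system can produce a skew the advertiser never asked for, consider a deliberately simplified sketch. It assumes, hypothetically, that the delivery system just shows an ad to the users with the highest predicted click rates, up to a fixed budget. Facebook’s real system, which weighs thousands of inputs inside an ad auction, is not public. The `User` class, the click rates, and the function names below are illustrative inventions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    user_id: int
    gender: str             # recorded only to measure the outcome; the ranker ignores it
    past_click_rate: float  # hypothetical stand-in for a learned engagement prediction


def deliver_by_engagement(audience: list[User], budget: int) -> list[User]:
    """Show the ad to the `budget` users with the highest predicted click rate."""
    ranked = sorted(audience, key=lambda u: u.past_click_rate, reverse=True)
    return ranked[:budget]


def share_of(group: str, delivered: list[User]) -> float:
    """Fraction of delivered impressions that went to members of `group`."""
    return sum(u.gender == group for u in delivered) / len(delivered)


# Hypothetical audience chosen by an advertiser with no gender targeting:
# men's historical click rate (3.1%) is only slightly above women's (3.0%).
audience = [User(i, "man", 0.031) for i in range(5)] + [
    User(i, "woman", 0.030) for i in range(5, 10)
]

delivered = deliver_by_engagement(audience, budget=5)
print(f"share of impressions to men: {share_of('man', delivered):.0%}")
```

In this toy example, a gap of one-tenth of a percentage point in predicted click rates sends every impression to men, because a ranker that keeps only the top scorers turns small differences into large ones.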

In 2019, Upturn partnered with researchers at Northeastern University and the University of Southern California to study the ad delivery algorithm. Ads the researchers placed for jobs in the lumber industry reached 90 percent men, while 85 percent of ads for supermarket cashiers were delivered to women. The researchers had specified the same audience for both ads, meaning Facebook’s ad delivery algorithm created the gender skew. Earlier this year, researchers at the University of Southern California again placed job ads on Facebook, using the same targeting parameters for each. Again, Facebook’s delivery showed a gender skew that mirrored real-world disparities.
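The audit approach described above comes down to counting who saw each ad. The sketch below uses hypothetical impression counts, chosen only to mirror the percentages reported for the 2019 study and not taken from its data. It computes each ad’s share of male viewers and a standard two-proportion z-statistic for whether the gap between two identically targeted ads is larger than chance would explain. The researchers’ own statistical methods may differ.

```python
import math


def male_share(men: int, women: int) -> float:
    """Fraction of an ad's impressions that reached men."""
    return men / (men + women)


def two_proportion_z(x1: int, n1: int, x2: int, n2: int) -> float:
    """z-statistic for the difference between proportions x1/n1 and x2/n2,
    using the pooled proportion under the null hypothesis of no difference."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p1 - p2) / se


# Hypothetical impression counts (men, women) for two ads with identical targeting.
lumber_ad = (900, 100)
cashier_ad = (150, 850)

lumber_share = male_share(*lumber_ad)
cashier_share = male_share(*cashier_ad)
z = two_proportion_z(lumber_ad[0], sum(lumber_ad), cashier_ad[0], sum(cashier_ad))

print(f"lumber ad: {lumber_share:.0%} men; cashier ad: {cashier_share:.0%} men; z = {z:.1f}")
```

A z-statistic far beyond about 2 in magnitude indicates that a gap of this size would be very unlikely if both ads had reached the same mix of people.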

In the California case, Facebook argues that just because studies have shown a gender skew on test ads doesn’t mean that skew shows up in job ads generally or in other kinds of ads. Researchers think it probably does. Their findings suggest “this kind of thing likely holds for other types of ads,” says Aleksandra Korolova, a computer scientist at USC who participated in the 2019 and 2021 ad studies.

Allegations of discrimination in Facebook’s ad targeting are not new. For years, advertisers could use “multicultural affinity” or “ethnic affinity” labels, as well as age, gender, and other legally protected characteristics, to target ads. In the spring of 2016, lawyers at the ACLU discovered that Facebook was allowing advertisers to target ads for housing, jobs, and credit opportunities using these traits, even though discrimination in such ads is prohibited by federal law. A ProPublica exposé about the problem that fall put public pressure on Facebook to change its ad tech. Despite the uproar, reporters continued to discover ways that ads could discriminate in areas covered by federal civil rights statutes. Ultimately, real change came from lawsuits. In March 2019, Facebook settled three suits and two Equal Employment Opportunity Commission complaints by promising to create a new ad-buying portal for housing, employment, and credit ads. Advertisers placing these ads would no longer be able to target based on multicultural affinity, gender, age, and other protected characteristics. Facebook would also remove these characteristics from its Lookalike Audience tool for these types of ads. Facebook never acknowledged wrongdoing.

While the settlement included ads for loans and mortgages, it didn’t include other financial services, including banking opportunities and insurance. Addressing any discrimination in these areas would have required the application of state public accommodation laws. The settlement also didn’t touch on Facebook’s own ad delivery algorithm, instead focusing on the first step of ad buying, in which the advertiser selects its audience. Important as it was, the settlement left plenty of room for bias in how ads are selected and delivered.

Before the settlement was reached, Facebook used the same defense against allegations of housing discrimination that it is now using in the case over insurance discrimination: that it is immunized from the claims by Section 230. In one sense, the company has a point. What is being delivered is third-party content—ads—and Facebook is immune from liability for such material. But this is also a broad reading of Section 230 that opens up the lucrative and pervasive world of online ad targeting to unchecked discrimination.

This argument also misses the point of the suit. While ads are third-party content, the ads themselves are not the problem. The problem is in the way they are allegedly delivered, and both phases of the delivery system are determined by Facebook. That’s especially true for Facebook’s ad-delivery algorithm. “This is not a situation where you’re on the hook for something someone else did,” says Rieke. “That’s what Section 230 is supposed to protect you from. This is the consequence of your own voluntary profit maximization decisions.”

Still, Facebook argues that its own decisions about how to target ads, including those made in the delivery phase, where the advertiser is not involved, are protected. “A website’s decisions to suggest or match third-party content to users reflect editorial decisions that are protected publisher functions under the CDA,” the company told the court in asking for the California case to be thrown out. “Courts have determined that the CDA applies even if those decisions are challenged as discriminatory.”

Romer-Friedman and civil rights advocates want the court to see it differently. “All an algorithm is is a set of instructions,” he says. “An algorithm could say: Don’t show any housing ads to black people, don’t show any job ads to women. Just because you call it an algorithm doesn’t mean it’s not discriminatory in an intentional, unlawful way.”

The case comes at what Rieke describes as the height of Section 230’s protections, after courts have expansively interpreted the law. But it also comes at a time of increased scrutiny toward Section 230 from lawmakers, both Democrats and Republicans. In February, four Democratic senators introduced legislation, called the SAFE TECH Act, that would remove Section 230 protection in civil rights cases like the California one.

Actually eliminating bias from ad targeting, in particular from Facebook’s algorithms, is a messy road that tech companies do not want to go down. Perhaps most obviously, changes to its ad targeting would likely affect Facebook’s bottom line. Korolova points out that it took Facebook three years and multiple lawsuits to change just how advertisers for housing, credit, and jobs could target their content. It has been two years since studies showed gender skews in Facebook’s ad delivery algorithm, yet researchers are not aware of any effort by Facebook to address the problem. “If they’re not making changes, then it suggests that this could be tied up with their business interests,” she says.

A second explanation may be that acknowledging bias, particularly in its own algorithms, could become a Pandora’s box that Facebook does not want to open. The California lawsuit asks Facebook to remove the gender and age categories from its targeting of insurance ads. But Rieke doubts that would solve the problem, particularly when it comes to Lookalike Audiences in the first phase and, in the second phase, Facebook’s own delivery algorithm. There isn’t just a single input field telling the ad delivery algorithm a user’s gender or age, but tens of thousands of inputs that correlate with gender and age. In fact, he notes, when Facebook removed those classifications in the housing, job, and credit ad Lookalike Audience tool as a result of the 2019 settlement, Upturn found that the skews in those audiences didn’t change.
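Rieke’s point about correlated inputs can be illustrated with a toy model. In this sketch, which is an assumption and not a description of Facebook’s system, the scoring function never reads a user’s gender. It only sees a hypothetical feature, `follows_pages_x`, that happens to be far more common among the men in the example audience.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    gender: str            # kept only so we can measure the outcome
    follows_pages_x: bool  # hypothetical input that correlates with gender here


def predicted_ctr(user: User) -> float:
    """Toy scoring model: it never reads `gender`, only the correlated feature."""
    return 0.04 if user.follows_pages_x else 0.02


# Hypothetical audience: 5 of 6 men follow "pages X", but only 1 of 6 women do.
audience = (
    [User("man", True)] * 5 + [User("man", False)]
    + [User("woman", True)] + [User("woman", False)] * 5
)

# Deliver to the six highest-scoring users.
delivered = sorted(audience, key=predicted_ctr, reverse=True)[:6]
men_share = sum(u.gender == "man" for u in delivered) / len(delivered)
print(f"share of impressions to men, with gender removed from the model: {men_share:.0%}")
```

Removing the gender field changes nothing here: five of the six impressions still go to men, because the remaining feature carries much of the same information.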

The difficulty of removing the bias that is allegedly baked into the algorithms gives a sense of why Facebook has refused to change it for years, and repeatedly hides behind Section 230 in court. According to Rieke and Korolova, there are a few options to actually remove bias from Facebook’s own algorithms. First, Facebook could rebalance its ad delivery in areas protected by civil rights laws. For example, after its ad delivery algorithm selects an audience with a gender skew for a job ad, Facebook could automatically rebalance the target audience to eliminate bias. A second option would be to distribute the ad randomly within the advertiser’s selected audience, without using Facebook’s delivery algorithm. Third, Facebook could remove these ads from people’s News Feeds and instead promote them as listings in a Craigslist-style tab, where anyone interested in ads for jobs, housing, or financial services could browse on equal footing.
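The first two options can be sketched in a few lines. This is a minimal illustration under assumed conditions, not a proposal from Rieke, Korolova, or Facebook: it supposes each user carries a hypothetical engagement score and a group label the platform can use for auditing. `deliver_randomly` ignores engagement entirely, while `deliver_rebalanced` keeps the engagement ranking within each group but sets each group’s share of impressions to match its share of the eligible audience, using largest-remainder rounding. All names are illustrative.

```python
import math
import random
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class User:
    user_id: int
    gender: str
    predicted_ctr: float  # hypothetical engagement score from a delivery model


def deliver_randomly(audience: list[User], budget: int, seed: Optional[int] = None) -> list[User]:
    """Option 2: ignore engagement scores and sample uniformly within the audience."""
    return random.Random(seed).sample(audience, budget)


def proportional_quotas(counts: Counter, budget: int) -> dict[str, int]:
    """Split `budget` across groups in proportion to their size (largest-remainder rounding)."""
    total = sum(counts.values())
    exact = {g: budget * c / total for g, c in counts.items()}
    quotas = {g: math.floor(x) for g, x in exact.items()}
    leftover = budget - sum(quotas.values())
    # Give any remaining slots to the groups with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda g: exact[g] - quotas[g], reverse=True)
    for g in by_remainder[:leftover]:
        quotas[g] += 1
    return quotas


def deliver_rebalanced(audience: list[User], budget: int) -> list[User]:
    """Option 1: rank by engagement within each group, but give each group
    the same share of impressions it has in the eligible audience."""
    quotas = proportional_quotas(Counter(u.gender for u in audience), budget)
    delivered = []
    for group, quota in quotas.items():
        members = sorted(
            (u for u in audience if u.gender == group),
            key=lambda u: u.predicted_ctr,
            reverse=True,
        )
        delivered.extend(members[:quota])
    return delivered


# Hypothetical audience in which men score slightly higher on predicted engagement.
audience = [User(i, "man", 0.031) for i in range(5)] + [
    User(i, "woman", 0.030) for i in range(5, 10)
]
print(Counter(u.gender for u in deliver_rebalanced(audience, budget=4)))
print(Counter(u.gender for u in deliver_randomly(audience, budget=4, seed=1)))
```

Rebalancing preserves some of the engagement optimization advertisers pay for; random delivery gives it up entirely but does not rely on group labels at all.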

It’s possible that neither Congress nor the courts will make Facebook and other tech companies liable for bias in their ad targeting systems. But maintaining the status quo would perpetuate an unequal internet in which some people receive better economic opportunities than others.

“What is the cost of Facebook?” asks Brody of the Lawyers’ Committee. “You’re not paying with dollars, you’re paying with your data. What Facebook is doing is for some users, they are getting more when they pay that cost with their data, versus other users who are getting less when they pay that cost. It’s not a free service. It’s a barter.”